Introduction

With the emergence of deep learning techniques and the availability of large-scale datasets, optical flow estimation performance has improved significantly. However, these networks are vulnerable to adversarial attacks, like any other deep learning approach. Recent work [0,1,2] focuses on attacking optical flow networks with patches pasted onto the frames. These patch-based attacks introduce occlusions and motion boundaries in the areas where the patch is placed, which degrades the performance of current optical flow estimators [3].

Self-driving cars use these models to estimate the motion of objects on the road and surroundings and use this information to decide on close-to-real-time actions/measurements. Attacking these networks means that the estimated motion could be completely wrong, affecting the decision process based on this information.

Animation from https://perceiving-systems.blog/en/post/the-road-to-safe-self-driving

What is Subset Scanning?

Our approach is based on Fast Generalized Subset Scanning [4], so a quick review makes it easier to understand why the extensions provided in this paper are relevant to the patch-based attack problem. Subset Scanning treats neural networks as data-generating systems and applies anomalous pattern detection methods to their activation data. It efficiently searches over a large combinatorial space to find the groups of records that differ the most from expected behavior. While existing works employed Subset Scanning to detect different types of out-of-distribution (OOD) samples [5; 6; 7], we are the first to use it to (1) detect patch-based attacks, (2) on sequential images across the temporal dimension, (3) on networks for regression tasks.

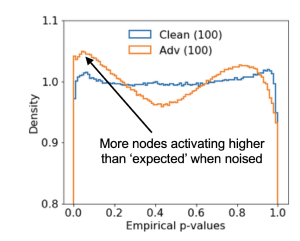

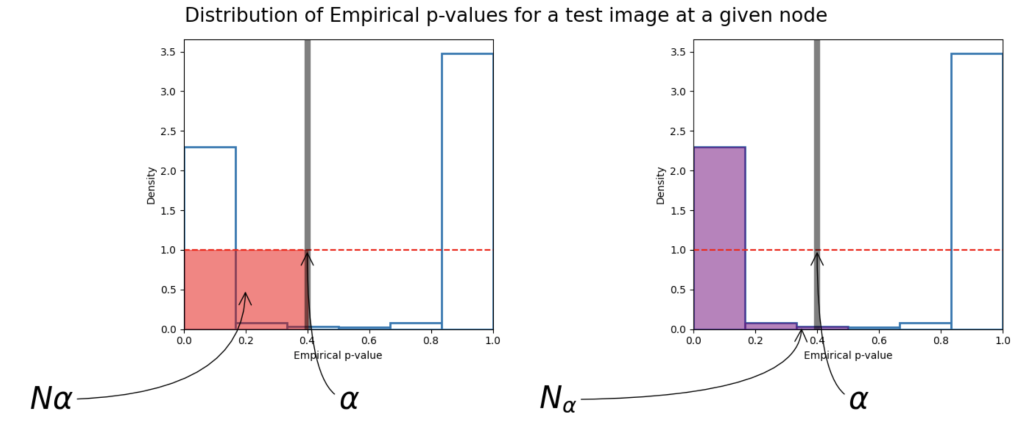

Our working assumption is that activations from patch-attacked samples have a different distribution of p-values than activations from clean samples. For us, the p-value of a node is the proportion of activations, drawn from that same node over multiple clean samples, that are greater than the activation produced by the sample under evaluation.
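
To make the definition above concrete, here is a minimal sketch of the empirical p-value computation with NumPy. The function name `empirical_pvalues` and the array layout (one row per clean sample, one column per node) are assumptions made for illustration, not the code used in the paper.

```python
import numpy as np

def empirical_pvalues(clean_activations, test_activations):
    """Proportion of clean activations at each node that exceed the test activation.

    clean_activations: (n_clean_samples, n_nodes) array from clean inputs (assumed layout).
    test_activations:  (n_nodes,) array from the sample under evaluation.
    """
    clean = np.asarray(clean_activations, dtype=float)
    test = np.asarray(test_activations, dtype=float)
    # Compare every clean activation with the test activation of the same node,
    # then average over the clean samples.
    return (clean > test[None, :]).mean(axis=0)

# Toy usage: 500 clean samples, 3 nodes; the last node fires unusually strongly.
rng = np.random.default_rng(0)
clean = rng.normal(size=(500, 3))
test = np.array([0.0, 0.5, 4.0])
pvalues = empirical_pvalues(clean, test)
```

An unusually large activation yields a p-value close to 0, so an attacked sample is expected to produce an excess of small p-values.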

How do we score one sample at a given node? We apply scoring functions to a new set of frames to measure how far their p-values deviate from uniform. Although Subset Scanning can use parametric scoring functions (e.g., Gaussian, Poisson), the distribution of activations within particular layers is highly skewed and, in some cases, bimodal. We use non-parametric scan statistics (NPSS; see [4] for details), which make minimal assumptions about the underlying distribution of node activations and let us scan across different types of layers.
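
As an illustration of a non-parametric scan statistic, the sketch below uses the Berk-Jones statistic, one of the NPSS variants discussed in [4]. The post does not say which statistic was used in the experiments, so the choice of Berk-Jones, the function names, and the grid of thresholds `alphas` are all assumptions.

```python
import numpy as np

def berk_jones(n_alpha, n, alpha):
    """Berk-Jones statistic: n * KL(n_alpha / n, alpha), counted only when
    the observed fraction of significant p-values exceeds alpha."""
    observed = n_alpha / n
    if observed <= alpha:
        return 0.0
    if observed >= 1.0:
        return n * np.log(1.0 / alpha)
    return n * (observed * np.log(observed / alpha)
                + (1.0 - observed) * np.log((1.0 - observed) / (1.0 - alpha)))

def npss_score(pvalues, alphas):
    """Score a fixed set of p-values, maximizing over significance thresholds."""
    pvalues = np.asarray(pvalues, dtype=float)
    n = pvalues.size
    return max(berk_jones(np.sum(pvalues <= a), n, a) for a in alphas)

alphas = [0.05, 0.1, 0.2]  # hypothetical threshold grid
clean_score = npss_score([0.15, 0.35, 0.55, 0.75, 0.95], alphas)
attacked_score = npss_score([0.01, 0.02, 0.03, 0.40, 0.90], alphas)
```

P-values that look roughly uniform score at or near zero, while a set with an excess of small p-values scores high.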

Although NPSS provides a means to evaluate the anomalousness of a subset of activations for a given input, discovering which of the 2^J possible subsets provides the most evidence of an anomalous pattern is computationally infeasible for large J, which is the case for the layer sizes found in deep learning models. That is why we need a priority function; in this case, it is the proportion of a record's p-values that fall under a threshold. We then have a guarantee that the subset maximizing the score consists only of the top-k highest-priority records for some k, so only J candidate subsets need to be scored for each threshold instead of 2^J.
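
The search described above can be sketched as follows. This is a simplified illustration and not the authors' implementation: it assumes every record carries the same number of p-values, uses the Berk-Jones statistic as the scoring function, and the names `berk_jones`, `scan_records`, and the threshold grid are hypothetical.

```python
import numpy as np

def berk_jones(n_alpha, n, alpha):
    """n * KL(n_alpha / n, alpha) when the observed fraction exceeds alpha, else 0."""
    observed = n_alpha / n
    if observed <= alpha:
        return 0.0
    if observed >= 1.0:
        return n * np.log(1.0 / alpha)
    return n * (observed * np.log(observed / alpha)
                + (1.0 - observed) * np.log((1.0 - observed) / (1.0 - alpha)))

def scan_records(pvalues, alphas):
    """Find the highest-scoring subset of records without enumerating 2^J subsets.

    pvalues: (J, M) array, J records with M p-values each (assumed layout).
    Returns (best score, sorted indices of the best subset, best threshold).
    """
    pvalues = np.asarray(pvalues, dtype=float)
    n_records, n_per_record = pvalues.shape
    best_score, best_subset, best_alpha = 0.0, np.array([], dtype=int), None
    for alpha in alphas:
        significant = pvalues <= alpha
        priority = significant.mean(axis=1)            # proportion under the threshold
        order = np.argsort(-priority, kind="stable")   # highest priority first
        cumulative = np.cumsum(significant.sum(axis=1)[order])
        for k in range(1, n_records + 1):              # only J prefixes per threshold
            score = berk_jones(cumulative[k - 1], k * n_per_record, alpha)
            if score > best_score:
                best_score, best_subset, best_alpha = score, order[:k], alpha
    return best_score, np.sort(best_subset), best_alpha

# Toy usage: records 0 and 2 carry small p-values, the others look clean.
toy = np.array([[0.01, 0.02], [0.50, 0.90], [0.01, 0.02], [0.60, 0.70]])
score, subset, alpha = scan_records(toy, alphas=[0.05])
```

For each threshold the loop scores only the J prefixes of the priority ordering, which is what makes the search tractable for layers with many nodes.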

Spatially Constrained Search Space in Optical Flow Networks

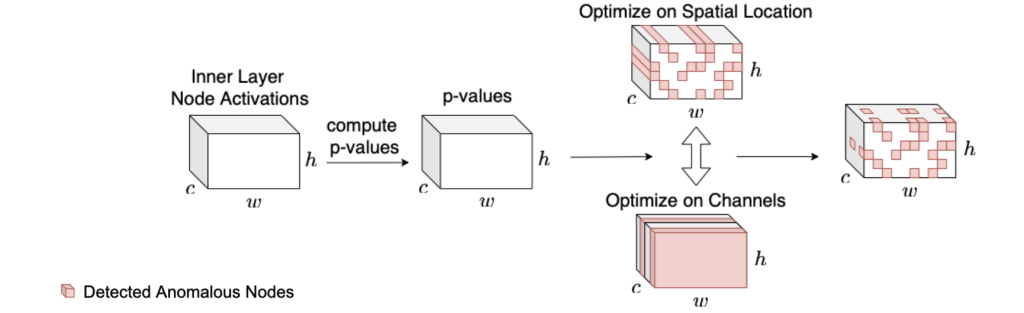

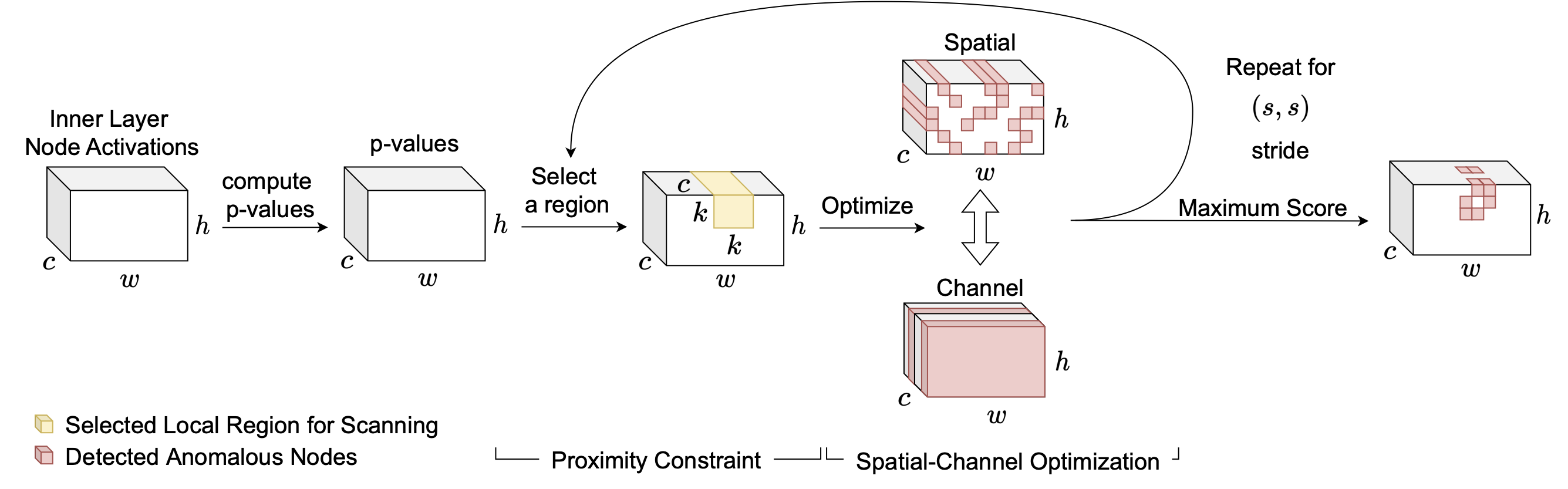

Previously, the vanilla approach [8] forced the inclusion of all channels, which naively implies that every channel in the inner layer being scanned is impacted by a patch attack on an optical flow network. A more realistic expectation is that the attack affects only some subset of the channels. By introducing Spatial-Channel Optimization (SCO), we return a subset of spatial locations crossed with a subset of channels. While SCO allows us to find an anomalous subset of node activations across spatial locations, the detected locations may be far apart, spanning the entire frame of the given test image pair. We want to further constrain the search space for subset scanning so that the detected anomalous locations strictly come from a local spatial neighborhood. Thus, we enforce a proximity constraint where our optimization is only applied to a k×k spatial region (the yellow region in the figure below).

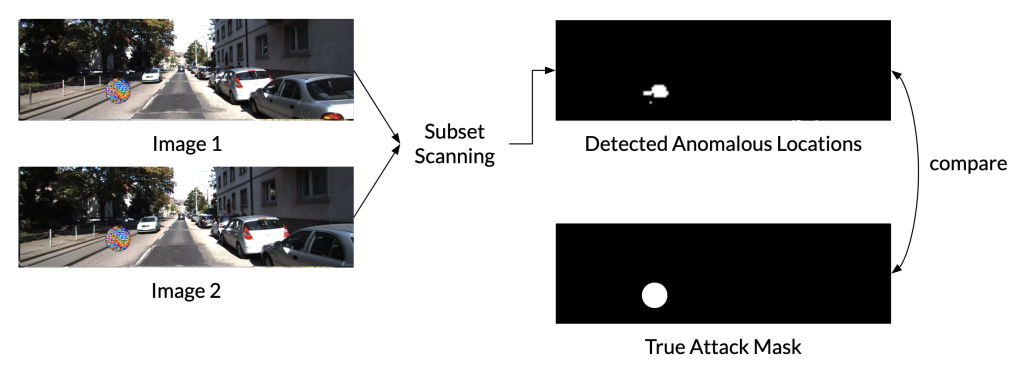

This ensures that the detected subset of anomalous locations comes only from this spatial neighborhood. We repeat this for every k×k neighborhood across the feature map and return the subset of p-values from the k×k neighborhood that yields the highest NPSS score. Given that subset, we can easily localize the attack by finding where the detected p-values occur in the inner-layer feature map. These detected locations can be up-sampled, if needed, to match the resolution of the input frames, or vice versa. The localized attacks can be used for mitigation or simply to mask the area for downstream flow estimation.
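
The sliding-neighborhood search can be sketched as below. This is a deliberately simplified illustration under stated assumptions: p-values are stored as a (channels, height, width) array, the alternating location/channel optimization of SCO is omitted, and within a window the subset is simply every p-value at or below the threshold, for which the Berk-Jones score reduces to N_alpha * log(1/alpha). The function names and the integer `scale` used for up-sampling are hypothetical.

```python
import numpy as np

def window_score(window_pvalues, alphas):
    """Best score over thresholds for one window, keeping only p-values <= alpha."""
    best_score, best_alpha = 0.0, None
    for alpha in alphas:
        n_alpha = int(np.sum(window_pvalues <= alpha))
        score = n_alpha * np.log(1.0 / alpha)
        if score > best_score:
            best_score, best_alpha = score, alpha
    return best_score, best_alpha

def constrained_scan(pvalues, k, alphas):
    """Scan every k x k neighborhood of a (C, H, W) p-value array.

    Returns the best score, the top-left corner of the best window,
    and an (H, W) boolean mask of the detected locations.
    """
    _, height, width = pvalues.shape
    best_score, best_corner, best_alpha = 0.0, None, None
    for row in range(height - k + 1):
        for col in range(width - k + 1):
            score, alpha = window_score(pvalues[:, row:row + k, col:col + k], alphas)
            if score > best_score:
                best_score, best_corner, best_alpha = score, (row, col), alpha
    mask = np.zeros((height, width), dtype=bool)
    if best_corner is not None:
        row, col = best_corner
        window = pvalues[:, row:row + k, col:col + k]
        # A location is flagged if any of its channels is significant.
        mask[row:row + k, col:col + k] = (window <= best_alpha).any(axis=0)
    return best_score, best_corner, mask

def upsample_mask(mask, scale):
    """Nearest-neighbor up-sampling of the mask by an integer factor."""
    return np.repeat(np.repeat(mask, scale, axis=0), scale, axis=1)

# Toy usage: plant a 2 x 2 block of small p-values in an 8 x 8 feature map.
rng = np.random.default_rng(0)
pvalues = rng.uniform(0.2, 1.0, size=(4, 8, 8))
pvalues[:, 2:4, 5:7] = 0.01
score, corner, mask = constrained_scan(pvalues, k=2, alphas=[0.01, 0.05, 0.1])
frame_mask = upsample_mask(mask, scale=4)
```

Because every candidate subset is confined to one window, the detected locations are contiguous by construction, and the up-sampled mask can be overlaid directly on the input frame.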

Experimental Setup

We validate our approach using four state-of-the-art flow estimators. When selecting the inner layers to scan, we choose the first layer of each of the network’s components. Specifically, we select the first layer of the encoder module, the first layer of the decoder module, and the correlation layer.

Datasets and Attacks

Following previous papers [0,1,2], we use the KITTI 2015, raw KITTI, MPI-Sintel, and raw Sintel datasets. KITTI consists of road scene images with sparse optical flow labels (2015) and without labels (raw). MPI-Sintel contains 23 sequences from the computer-animated short film Sintel with flow labels. Its raw frames without labels have also been used in previous un- or semi-supervised flow estimators. We constructed patch-based adversarial attacks on the four flow networks. The figure below shows the change in end-point error with and without the adversarial patch attack. We use patches of four different sizes with respect to the input image resolution. As expected, performance gets worse as the patch size increases. In terms of flow networks, these patch attacks harm the performance of FlowNetC the most and RAFT the least.
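
For readers unfamiliar with the metric, end-point error is the Euclidean distance between the predicted and ground-truth flow vectors, averaged over pixels. The sketch below is a generic NumPy version; the function name, the (height, width, 2) layout, and the optional `valid` mask for sparse labels such as those in KITTI 2015 are assumptions, not the evaluation code behind the reported numbers.

```python
import numpy as np

def end_point_error(flow_pred, flow_gt, valid=None):
    """Mean Euclidean distance between predicted and ground-truth flow vectors.

    flow_pred, flow_gt: (H, W, 2) arrays of (u, v) displacements (assumed layout).
    valid: optional (H, W) boolean mask selecting pixels that have labels.
    """
    error = np.linalg.norm(np.asarray(flow_pred) - np.asarray(flow_gt), axis=-1)
    if valid is not None:
        error = error[valid]
    return float(error.mean())

# Toy usage: every predicted vector is off by (3, 4), so the error is 5 pixels.
gt = np.zeros((2, 2, 2))
pred = gt + np.array([3.0, 4.0])
epe = end_point_error(pred, gt)
```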

Results

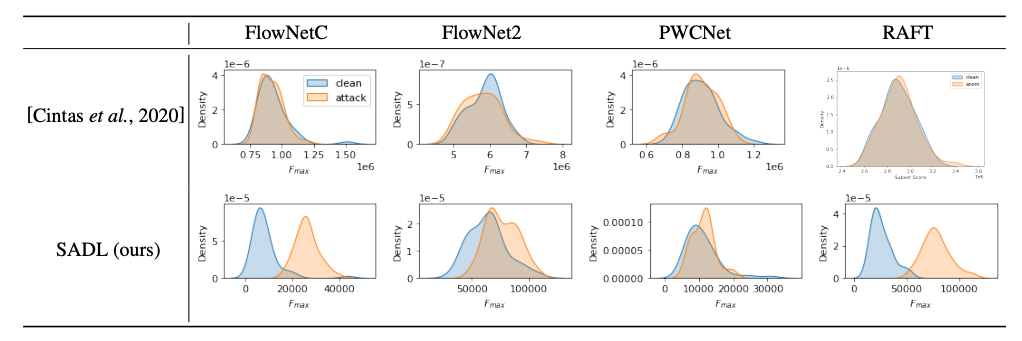

Overall, we see higher detection performance when we include spatial information; without it, the method cannot detect the attacks on any model. This can be observed by looking at the score distributions of the clean (blue) and attacked (orange) test sets. The two distributions are more separable using SADL than using the baseline. While our method shows clear improvements across multiple networks and datasets, both our method and the baseline struggle on MPI-Sintel for FlowNetC and on KITTI 2015 for PWCNet.

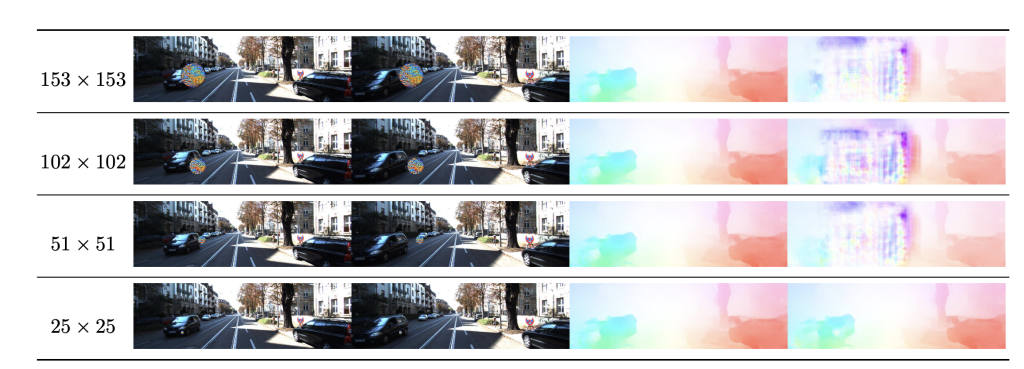

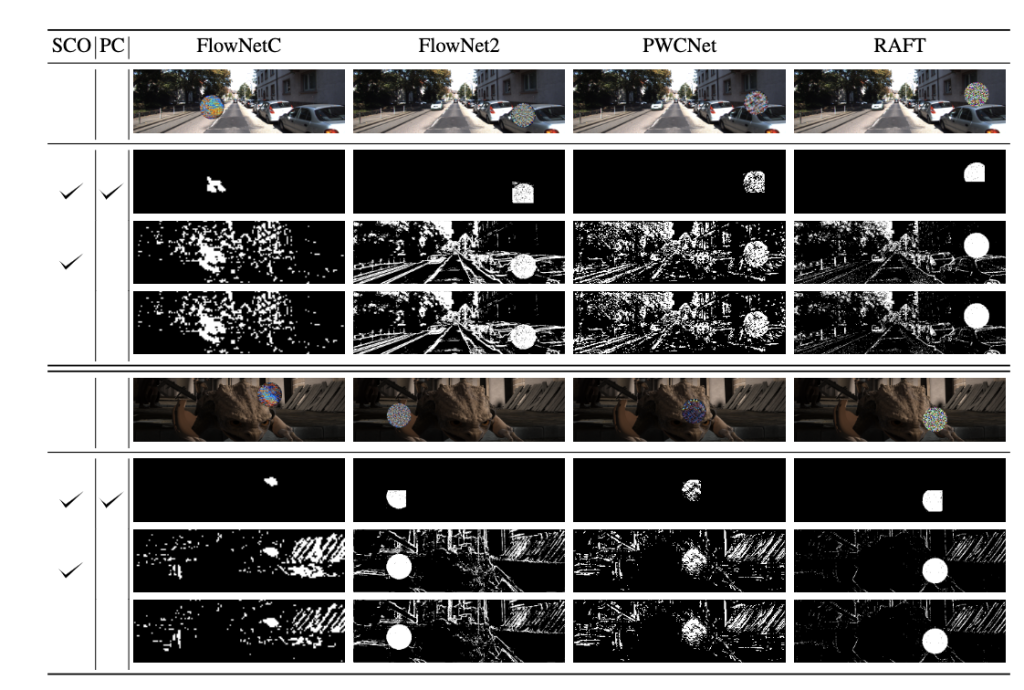

We can see an example visualization of a detected subset of anomalous locations in the feature space for the attacks of size p=153 for KITTI 2015 (top) and MPI-Sintel (bottom). We can successfully detect a subset of anomalous locations that align with the location of the patch attack.

Conclusion & Next Steps

We showcase how a constrained search space can improve the detection and localization of patch-based adversarial attacks in optical flow estimators. We apply spatially constrained subset scanning to the inner layers in an unsupervised manner, without any training or prior knowledge of the attacks. We further give insights into which layers are most affected by these attacks for various flow networks. An immediate next step could use the detected and localized attacks to devise mitigation techniques for flow estimators. More details in this presentation.

Look out for our work @ IJCAI 2023

Wednesday, 23rd August at 11:45-12:45 Computer Vision (3/6) Session

#3357 Spatially Constrained Adversarial Attack Detection and Localization in the Representation Space of Optical Flow Networks. Hannah Kim; Celia Cintas; Girmaw Abebe Tadesse; Skyler Speakman.

References

[0] Ranjan, A., Janai, J., Geiger, A. and Black, M.J., 2019. Attacking optical flow. In Proceedings of the IEEE/CVF International Conference on Computer Vision (pp. 2404-2413).

[1] Schrodi, S., Saikia, T. and Brox, T., 2021. What causes optical flow networks to be vulnerable to physical adversarial attacks. arXiv preprint arXiv:2103.16255.

[2] Schrodi, S., Saikia, T. and Brox, T., 2022. Towards understanding adversarial robustness of optical flow networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 8916-8924).

[3] Kim, H.H., Yu, S. and Tomasi, C., 2021. Joint detection of motion boundaries and occlusions. The British Machine Vision Conference (BMVC), 2021.

[4] McFowland, E., Speakman, S. and Neill, D.B., 2013. Fast generalized subset scan for anomalous pattern detection. The Journal of Machine Learning Research, 14(1), pp.1533-1561.

[5] Cintas, C., Das, P., Quanz, B., Tadesse, G.A., Speakman, S. and Chen, P.Y., 2022. Towards creativity characterization of generative models via group-based subset scanning. IJCAI 2022.

[6] Kim, H., Tadesse, G.A., Cintas, C., Speakman, S. and Varshney, K., 2022, March. Out-of-distribution detection in dermatology using input perturbation and subset scanning. In 2022 IEEE 19th International Symposium on Biomedical Imaging (ISBI) (pp. 1-4). IEEE.

[7] Akinwande, V., Cintas, C., Speakman, S. and Sridharan, S., 2020. Identifying audio adversarial examples via anomalous pattern detection. arXiv preprint arXiv:2002.05463.

[8] Cintas, C., Speakman, S., Akinwande, V., Ogallo, W., Weldemariam, K., Sridharan, S. and McFowland, E., 2021, January. Detecting adversarial attacks via subset scanning of autoencoder activations and reconstruction error. In Proceedings of the Twenty-Ninth International Conference on International Joint Conferences on Artificial Intelligence (pp. 876-882).